Jakob Richter

PhD in statistics, developer of several machine learning and Bayesian optimization libraries for R, tutor for R programming, interested in photography and maker culture.

Skills

- (co-)author of various machine learning packages

- fan of random forests

- research on hyperparameter tuning

- experience with interpretable machine learning (explainable AI)

- like to avoid data leakage

- care about statistical reasoning

- package development

- visualization with ggplot2

- automated reporting and papers with knitr

- interactive visualization and dynamic reports with shiny

- data wrangling with data.table

- teaching

- OOP with R6

- MariaDB administration for research and hobby projects

- know how to aggregate and use left/inner/outer joins

- PyTorch for teaching

- Python for hobby projects

- NumPy + pandas feel like R

- CI for automated testing

- C++

- Java

- PHP

- CSS

- VBA

- familiar with web-scraping techniques

- built this site using Hugo

- working with Arch Linux daily

- all projects in git

- fish>bash

- LaTeX

- use Docker to run research projects on a high-performance cluster and for hobby projects

- know how to set up a basic web server

- use a SLURM cluster for research

- Photoshop

- DaVinci Resolve

- InDesign

Coding Projects

This section contains some selected open source projects. You can find most of my projects on my GitHub.

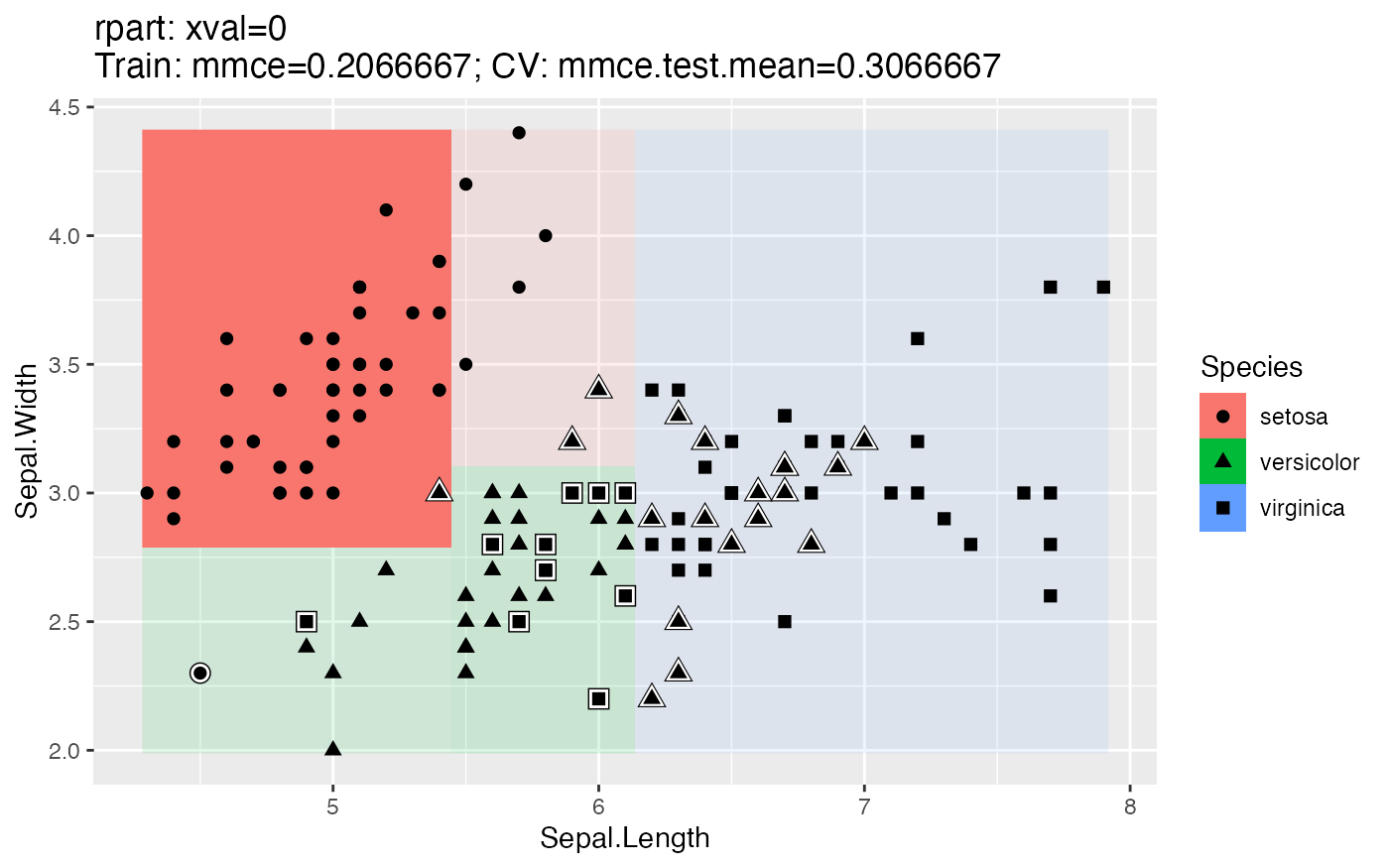

mlr

Co-author of mlr, the widely used machine learning library for R that includes over 80 classification methods and over 50 regression methods. mlr also offers hyperparameter optimization as well as pre- and post-processing methods. I implemented many learners and Bayesian optimization for tuning, fixed various bugs, and took care of unit tests, documentation, and code reviews.

mlr3 and mlr3verse

Co-author of the successor of mlr. I contributed to the early development and the new object-oriented design of mlr3. The mlr3verse consists of various R packages that are developed by a large team spanning several universities. My work largely consists of code reviews and helping others contribute to the project. My main contributions are to the tuning-related packages mlr3tuning, bbotk, and mlr3mbo.

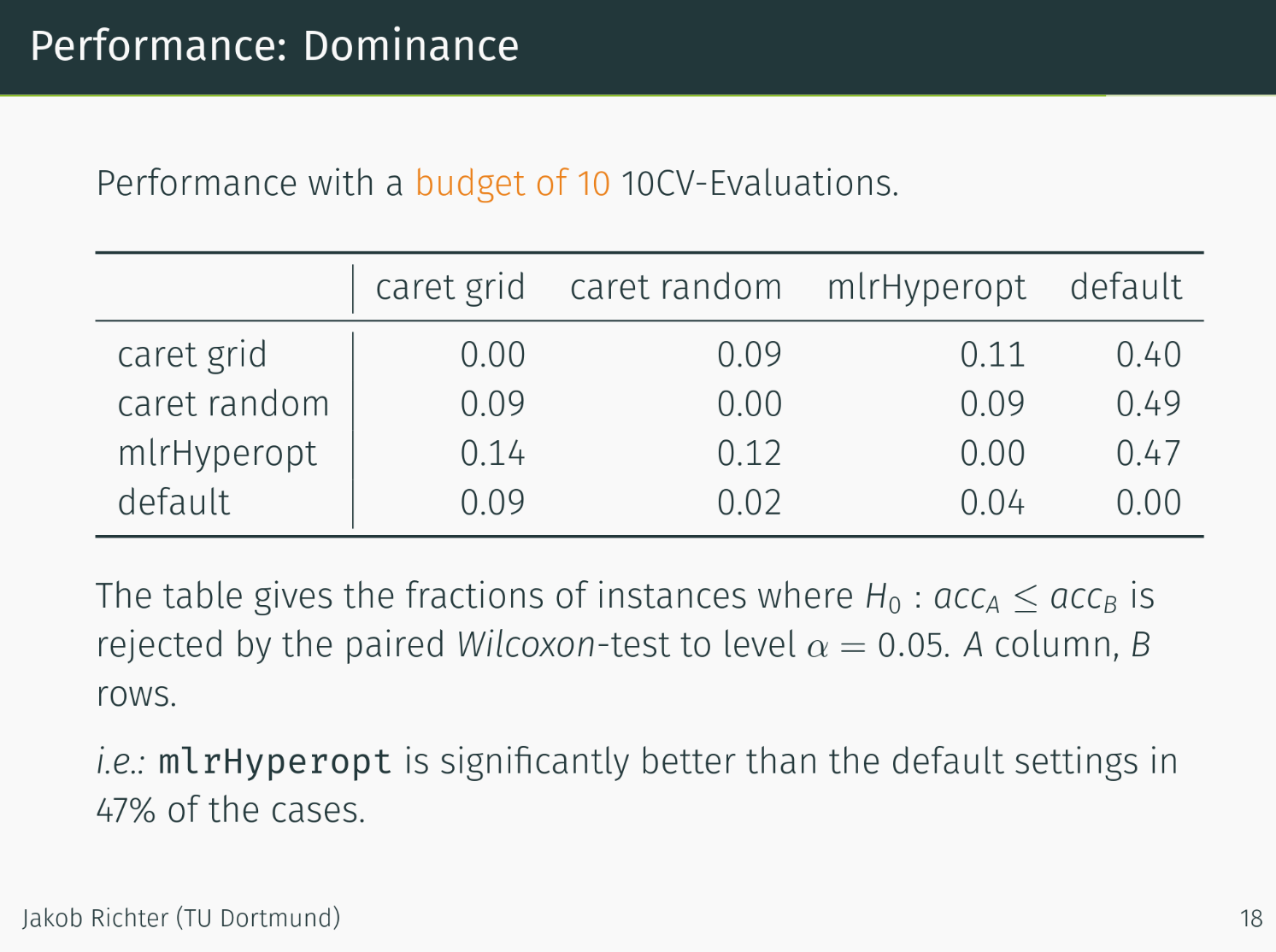

mlrHyperopt

With this small side project, I simplified hyperparameter tuning within mlr by defining a heuristic that chooses an appropriate search space and optimizer for a given machine learning algorithm and dataset. More details can be found in my blog post on R-bloggers and in my talk at the international useR! conference 2017.

mlrMBO

Maintainer and co-author of mlrMBO, the model-based optimization toolkit for R. The package offers various Bayesian optimization methods and supports parallelization as well as multi-criteria optimization. Most of my research is implemented in this package, partly in development branches. You can see my presentation of mlrMBO at the useR! conference 2016 at Stanford here.

Publications

Here are some selected publications. An up-to-date and complete list can be found on my Google Scholar profile.

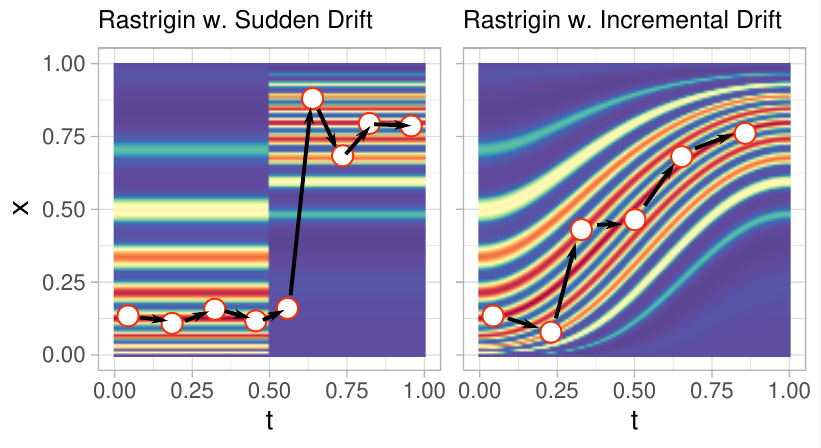

Conference Paper - Model-based Optimization with Concept Drifts

This paper presents a straightforward approach for applying model-based optimization (Bayesian optimization) to problems that change systematically over time. This approach could, for example, be used to optimize the hyperparameters of an online machine learning algorithm.

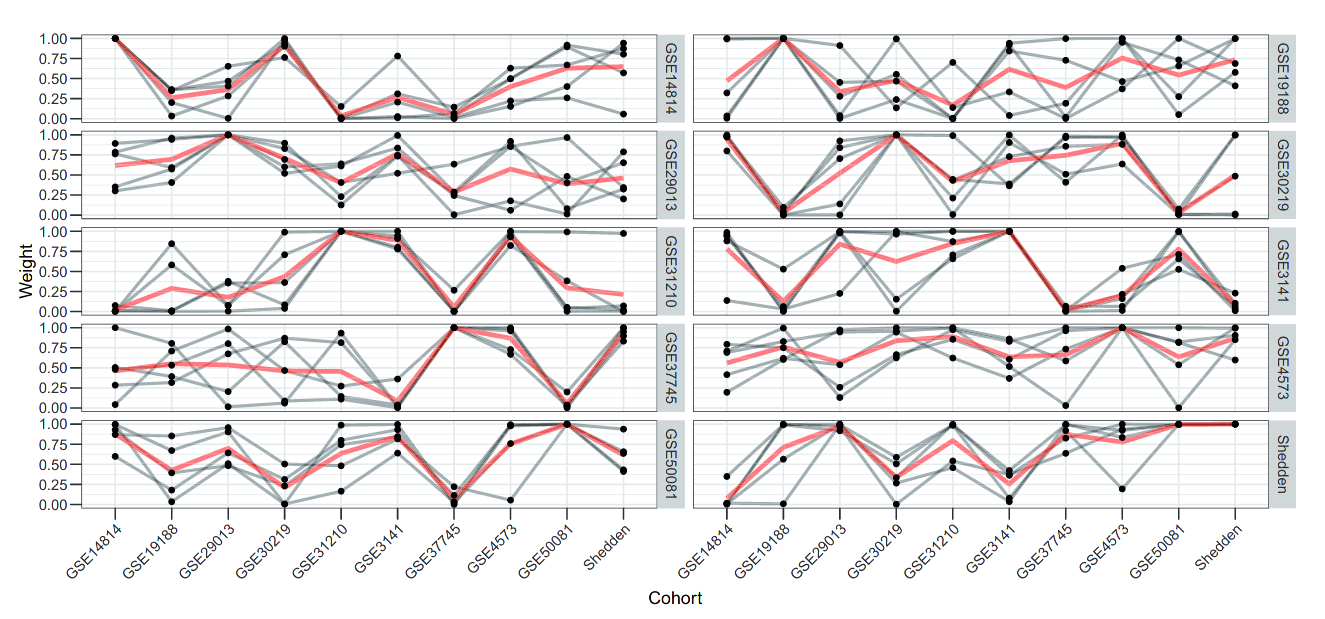

Conference Paper - Model-based optimization of subgroup weights for survival analysis

This paper successfully applies model-based optimization to identify optimal subgroup weights for survival prediction with high-dimensional genetic covariates. When predicting survival for a specific subgroup, it can be beneficial to combine that subgroup's training data with data from other subgroups to obtain a better model.

Preprint - mlrMBO: A modular framework for model-based optimization of expensive black-box functions

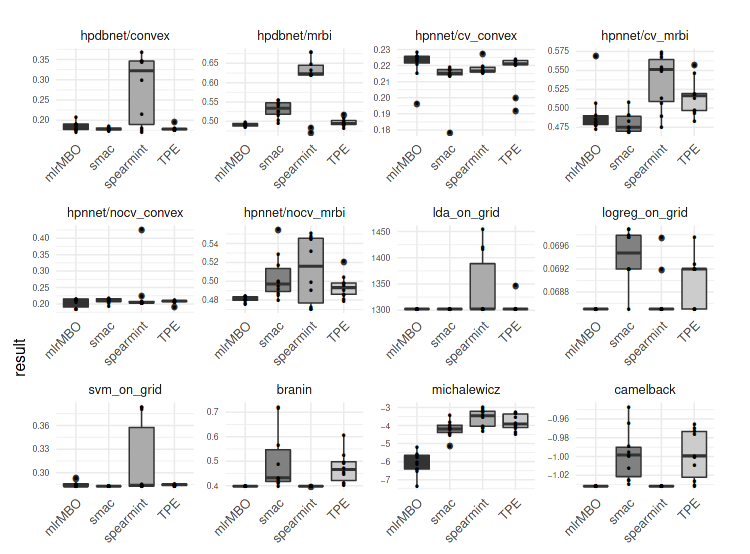

This is the accompanying paper for the mlrMBO R package. It gives a brief introduction to the model-based optimization algorithm and shows that mlrMBO can outperform other optimization packages on synthetic test functions as well as on real hyperparameter optimization problems.

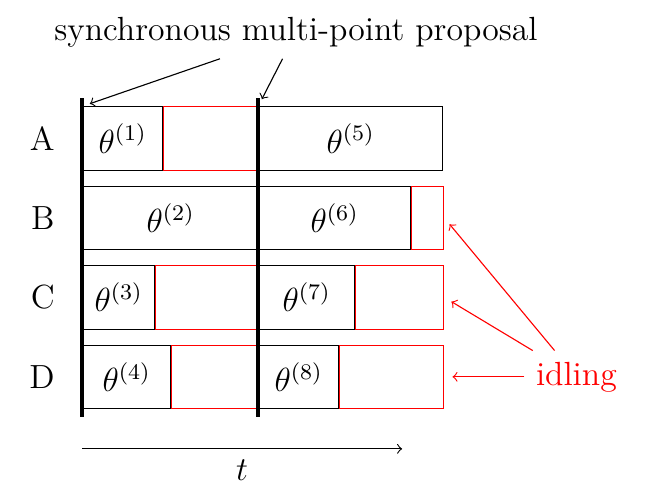

Conference Paper - Faster model-based optimization through resource-aware scheduling strategies

This paper shows one way to parallelize model-based optimization, using the example of tuning an SVM. Heterogeneous runtimes can leave CPU resources unused; we tackle this by applying scheduling strategies based on predicted runtimes. This allowed us to use the resources more efficiently, which led to better tuning results. We improved our scheduling strategy in the follow-up paper Rambo: Resource-aware model-based optimization with scheduling for heterogeneous runtimes and a comparison with asynchronous model-based optimization.

Journal Paper - mlr: Machine Learning in R

This paper is the formal publication of mlr. More details can be found in the online tutorial here. Please note that we advise using mlr3 instead, which comes with in-depth documentation in the form of an online book.

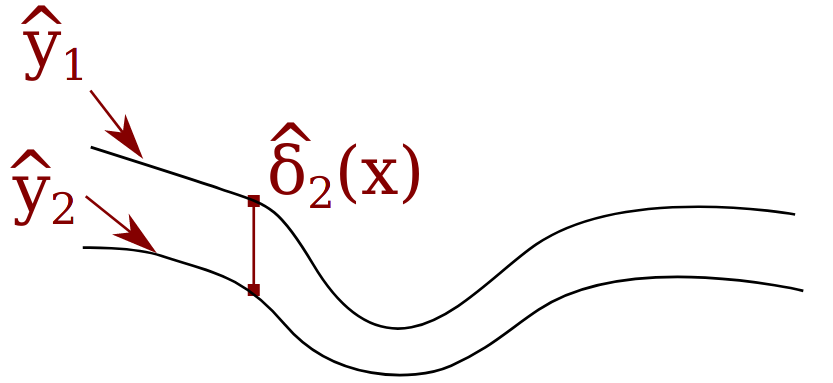

Master's Thesis - Model-based Hyperparameter Optimization for Machine Learning Algorithms on Large Datasets

In my master's thesis, I developed a model-based approach to multi-fidelity optimization. It finds promising hyperparameter configurations faster by evaluating the machine learning algorithm on small subsamples of the data.

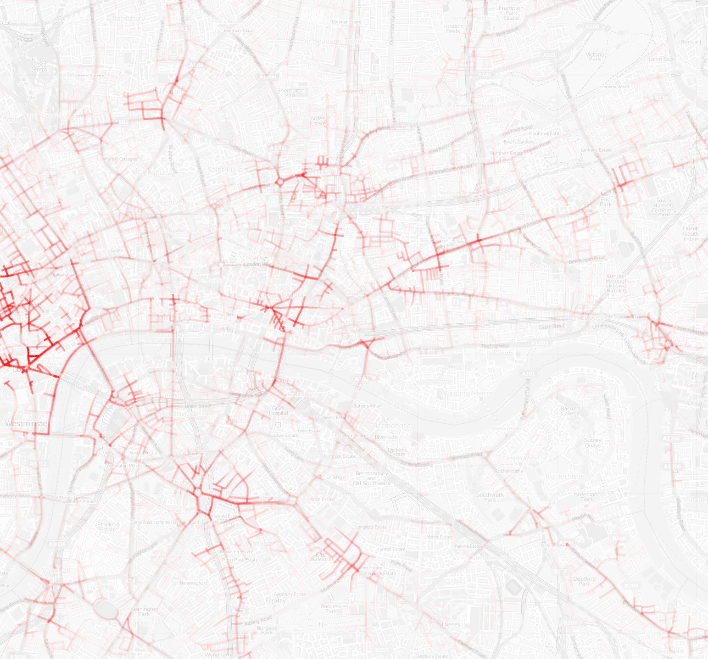

Bachelor's Thesis, Conference Talk - Analyzing geo data on street level, using the example of road accidents in London

In my bachelor's thesis, I developed an algorithm that maps observations given as latitude/longitude coordinates to the corresponding street segment. This yields a much more insightful visualization than a conventional heat map or individual points.
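To illustrate the general idea of snapping point observations to streets, here is a minimal, generic nearest-segment sketch in Python. It is not the algorithm from the thesis: it assumes the coordinates have already been projected to a planar, metric system and that the street network is given as straight segments keyed by hypothetical ids. Each observation is assigned to the segment with the smallest point-to-segment distance, and the resulting counts per segment could then be used to color streets.

```python
from collections import Counter
from math import hypot


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b (planar coordinates assumed)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        # Degenerate segment: both endpoints coincide.
        return hypot(px - ax, py - ay)
    # Parameter of the orthogonal projection onto the line, clamped to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len2
    t = max(0.0, min(1.0, t))
    return hypot(px - (ax + t * dx), py - (ay + t * dy))


def nearest_segment(p, segments):
    """Return the id of the segment closest to p.

    segments: dict mapping a hypothetical segment id to a pair of endpoints.
    """
    return min(segments, key=lambda sid: point_segment_distance(p, *segments[sid]))


def count_per_segment(points, segments):
    """Count how many observations are snapped to each segment."""
    return Counter(nearest_segment(p, segments) for p in points)


# Usage: two parallel hypothetical streets and three observations.
streets = {"A": ((0.0, 0.0), (10.0, 0.0)), "B": ((0.0, 5.0), (10.0, 5.0))}
accidents = [(3.0, 1.0), (4.0, 4.5), (9.0, -0.5)]
print(count_per_segment(accidents, streets))
```

A real street network would need a spatial index instead of the linear scan in `nearest_segment`, and a proper map projection for raw latitude/longitude values.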

Projects

Dortmunder R Kurse

I give courses for beginner and advanced R programmers for clients such as BASF, Siemens, and AOK. Advanced topics include automatic reporting, Shiny, and machine learning.

Lecture: Automatic Machine Learning

Organization of exercises, student discussions, and exam.

Bayesian Optimization as a Service

Supervised a student in implementing mlrMBO as a REST service that can be run in a Docker container. Similar to SigOpt, just free and self-hosted.

DataMining Cup

Our TU Dortmund teams won first and second place in predicting whether an online order will be returned.

Experience

Postdoctoral Researcher

Research on applying Bayesian optimization to dynamically changing problems.

Postdoctoral Researcher

Member of the Automated Machine Learning research group; research on multi-objective optimization, a lecture on automated machine learning, and work on open source projects for machine learning in R: mlr3, mlr3tuning, mlr3hyperband.

Researcher

Finished my PhD; research on parallelizing Bayesian optimization and on hyperparameter optimization for expensive machine learning problems; worked on various open source projects for machine learning in R: mlr, mlrMBO, mlrHyperopt.

Student Assistant

Integrated multiple machine learning methods into the R package mlr.

Student Assistant

Implemented a system for creating automated reports based on current research surveys; the reports were used in the final TIMSS 2011 publication.